Spatial Controller

Spring 2019

2 Weeks

In this project, I built a method that turns the iPhone into a 3D input device for manipulating and interacting with digital 3D environments.

Problem Space

With the advent of AR, VR, and other technologies, 3D interfaces are becoming more common. However, when we view 3D content on a 2D computer screen and interact with it through a 2D input tool (the mouse), we are relying on suboptimal methods.

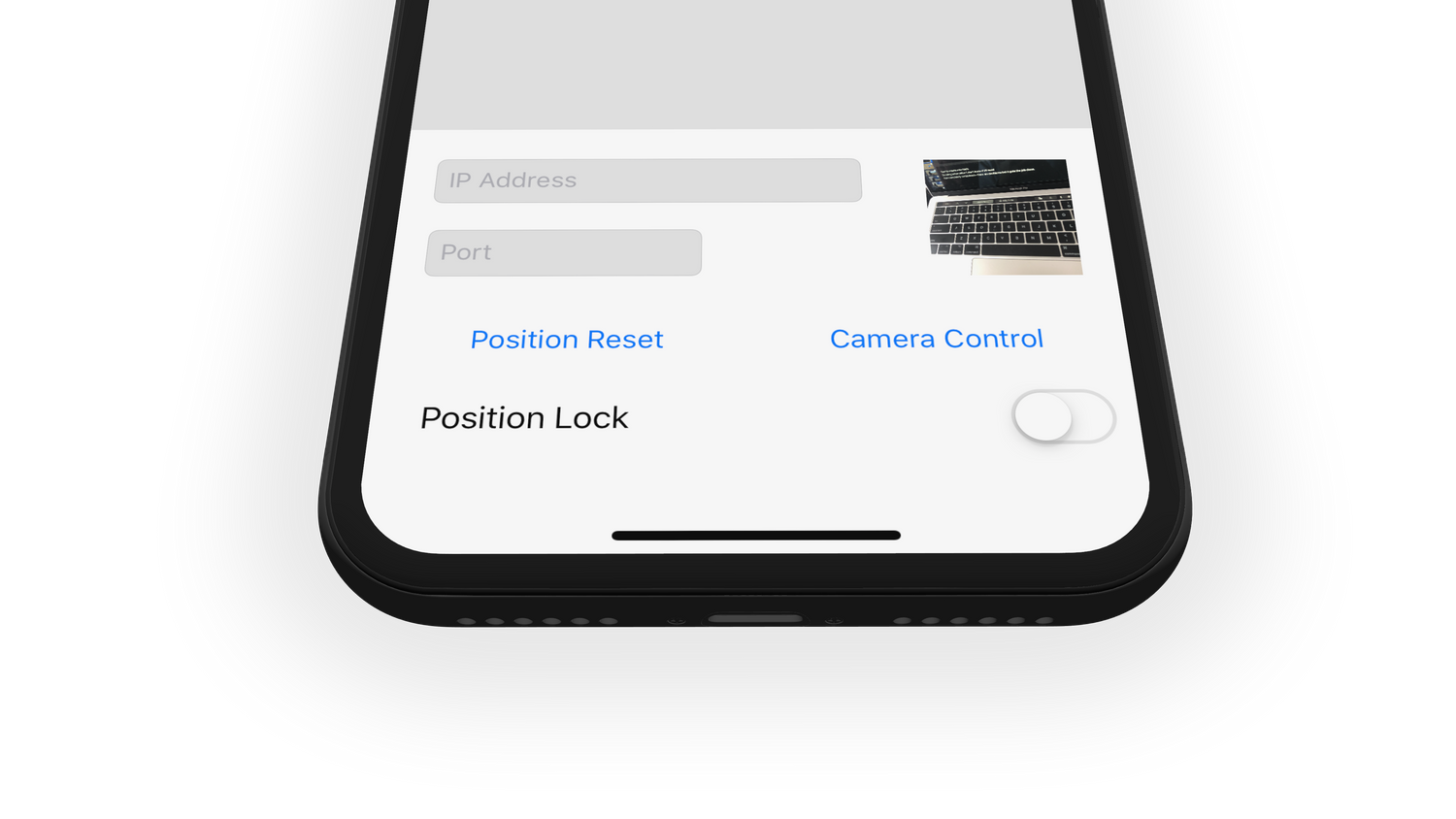

Input App

To prototype this interaction paradigm, I built a custom app in Swift that streamed real-time position and orientation data to my computer.

Debug UI version of the app I developed.

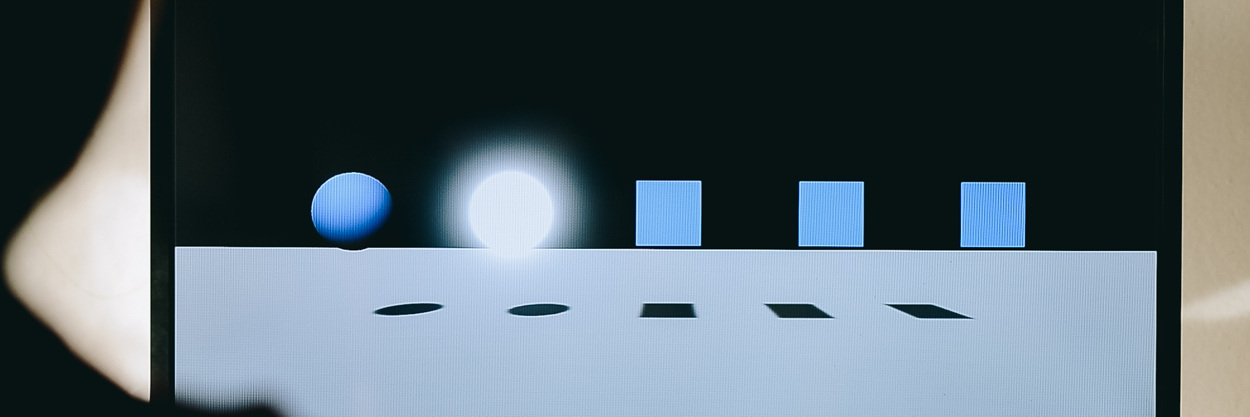

Example of cordless positional data streaming in Unity 3D
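A minimal sketch of what one streamed sample could look like, assuming a JSON payload sent once per frame (for example as a UDP datagram). The `PoseSample` type, its field names, and the quaternion layout are hypothetical; the app's actual wire format is not described here. Only Foundation's `JSONEncoder` and `JSONDecoder` are used, so the round trip runs on any platform.

```swift
import Foundation

// Hypothetical wire format for one pose sample streamed from the phone.
struct PoseSample: Codable, Equatable {
    var timestamp: Double       // seconds since streaming began
    var position: [Double]      // x, y, z in meters
    var orientation: [Double]   // unit quaternion as x, y, z, w
}

// Phone side: turn a sample into bytes for one datagram.
func encode(_ sample: PoseSample) throws -> Data {
    return try JSONEncoder().encode(sample)
}

// Computer side: turn received bytes back into a sample.
func decode(_ data: Data) throws -> PoseSample {
    return try JSONDecoder().decode(PoseSample.self, from: data)
}

let sample = PoseSample(timestamp: 0.5,
                        position: [0.25, 1.5, -0.75],
                        orientation: [0, 0, 0, 1])
let bytes = try encode(sample)
let received = try decode(bytes)
print(String(data: bytes, encoding: .utf8) ?? "")
```

JSON keeps packets easy to inspect in a debug UI; a fixed-size binary layout would be smaller if bandwidth or latency became a concern.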

Prototypes

Here is a set of quick interaction explorations to see how this technique can be used in more creative and meaningful ways. Through these experiments, I was trying to learn which kinds of tasks could benefit most from spatial input and spatial companion apps.

Interaction 1: Haptic Zones

In each of these grey volumes, continuous haptics are being triggered at varying strengths.

Each grey volume is embedded with a different ‘haptic viscosity’, delivering feedback at its own frequency and intensity. This passive information could be extremely rich when objects have different characteristics, especially as our haptic vocabulary grows more diverse.
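One way to model the zones, sketched under assumptions: each grey volume is an axis-aligned box carrying an intensity and a sharpness value in 0...1, and the zone containing the cursor decides which continuous haptic plays. On iOS these two numbers could plausibly be fed to Core Haptics as the `hapticIntensity` and `hapticSharpness` event parameters; that hookup is omitted, and `HapticZone` and `activeZone(for:in:)` are hypothetical names.

```swift
// Hypothetical haptic volume: an axis-aligned box with its own "viscosity".
struct HapticZone {
    var name: String
    var minCorner: SIMD3<Double>
    var maxCorner: SIMD3<Double>
    var intensity: Double   // 0...1, strength of the continuous vibration
    var sharpness: Double   // 0...1, from soft and rounded to crisp

    // Inclusive box test against the cursor position.
    func contains(_ p: SIMD3<Double>) -> Bool {
        let insideX = p.x >= minCorner.x && p.x <= maxCorner.x
        let insideY = p.y >= minCorner.y && p.y <= maxCorner.y
        let insideZ = p.z >= minCorner.z && p.z <= maxCorner.z
        return insideX && insideY && insideZ
    }
}

// The first zone containing the cursor decides the haptic; nil means the
// cursor is in empty space and the continuous haptic should stop.
func activeZone(for cursor: SIMD3<Double>, in zones: [HapticZone]) -> HapticZone? {
    return zones.first(where: { $0.contains(cursor) })
}

let zones = [
    HapticZone(name: "thick", minCorner: [0, 0, 0], maxCorner: [1, 1, 1],
               intensity: 0.9, sharpness: 0.2),
    HapticZone(name: "thin", minCorner: [2, 0, 0], maxCorner: [3, 1, 1],
               intensity: 0.3, sharpness: 0.8),
]
if let zone = activeZone(for: [0.5, 0.5, 0.5], in: zones) {
    print(zone.name, zone.intensity, zone.sharpness)
}
```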

Interaction 2: Camera Control

Camera rotation based on the orientation of the iPhone.

Orbiting in 3D software can break a user's flow; a more natural way to change the camera angle lets me stay focused on what I’m trying to do. This might work well in a viewing setting, but it is less effective while authoring.
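A sketch of the orientation-to-camera mapping, assuming the phone supplies yaw and pitch in radians (on iOS, Core Motion's `CMAttitude` exposes `yaw` and `pitch`). The camera sits on a sphere around a target point, with pitch clamped just short of the poles so it never flips. `OrbitCamera` and its default radius are illustrative assumptions, not the prototype's code.

```swift
import Foundation

// Hypothetical orbit camera driven by the phone's orientation.
struct OrbitCamera {
    var target: SIMD3<Double> = [0, 0, 0]
    var radius: Double = 5

    // Keep pitch just short of ±90° so the camera never passes over a pole.
    static let maxPitch = Double.pi / 2 - 0.01

    // yaw rotates around the vertical (y) axis; pitch tilts up and down.
    // At yaw = 0, pitch = 0 the camera sits on the +z side of the target;
    // the renderer would then aim it at `target`.
    func position(yaw: Double, pitch: Double) -> SIMD3<Double> {
        let p = min(max(pitch, -OrbitCamera.maxPitch), OrbitCamera.maxPitch)
        let offset = SIMD3<Double>(
            radius * cos(p) * sin(yaw),
            radius * sin(p),
            radius * cos(p) * cos(yaw)
        )
        return target + offset
    }
}

// Usage: feed in the latest device attitude each frame.
let camera = OrbitCamera(radius: 4)
let eye = camera.position(yaw: .pi / 4, pitch: 0.3)
print(eye)
```

Mapping orientation to a sphere keeps the camera at a constant distance, so only the viewing angle changes as the phone rotates.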

Interaction 3: Spatial 3D Touch

Using the iPhone's 3D Touch capabilities to manipulate these digital 3D objects in different ways.

Here I map the touch's force value to an attribute of the digital 3D object. I started asking questions like: “Is this interaction an appropriate metaphor for making something brighter? Faster?”
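A minimal sketch of the force mapping, assuming the raw values come from `UITouch.force` and `UITouch.maximumPossibleForce` on a 3D Touch device. They are passed in as plain `Double`s so the logic runs anywhere. The optional `exponent` is a hypothetical response curve for experimenting with how “brighter” or “faster” should feel under pressure.

```swift
import Foundation

// Maps a touch force onto an attribute range such as brightness or speed.
// force/maxForce would plausibly come from UITouch.force and
// UITouch.maximumPossibleForce; exponent is a hypothetical response curve.
func mapForce(_ force: Double, maxForce: Double,
              to range: ClosedRange<Double>, exponent: Double = 1) -> Double {
    guard maxForce > 0 else { return range.lowerBound }   // no force data
    let t = min(max(force / maxForce, 0), 1)               // normalize to 0...1
    let shaped = pow(t, exponent)                          // reshape the response
    return range.lowerBound + shaped * (range.upperBound - range.lowerBound)
}

// Usage: with exponent 2, a light press barely brightens and a hard press saturates.
let brightness = mapForce(3.0, maxForce: 6.0, to: 0.2...1.0, exponent: 2)
print(brightness)
```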

Another important detail to highlight is that this interaction includes haptics. A haptic pulse is triggered when the digital cursor enters (collides with) and exits a digital object. It makes your brain believe that there are “invisible objects” in reality!
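The enter/exit haptic depends on detecting transitions rather than overlap: firing on every frame the cursor is inside would produce a buzz, not a bump. A sketch of that edge detection, assuming spherical colliders and one update call per frame; `Sphere`, `BoundaryTracker`, and `BoundaryEvent` are hypothetical names.

```swift
// Events emitted only when the cursor crosses an object's boundary.
enum BoundaryEvent: Equatable {
    case entered(String)
    case exited(String)
}

// Hypothetical spherical collider for a digital object.
struct Sphere {
    var id: String
    var center: SIMD3<Double>
    var radius: Double

    func contains(_ p: SIMD3<Double>) -> Bool {
        let d = p - center
        return (d * d).sum() <= radius * radius
    }
}

// Remembers which objects the cursor was inside on the previous frame.
struct BoundaryTracker {
    private var inside: Set<String> = []

    init() {}

    // Call once per frame with the latest cursor position.
    mutating func update(cursor: SIMD3<Double>, objects: [Sphere]) -> [BoundaryEvent] {
        var events: [BoundaryEvent] = []
        for object in objects {
            let isInside = object.contains(cursor)
            let wasInside = inside.contains(object.id)
            if isInside && !wasInside {
                inside.insert(object.id)
                events.append(.entered(object.id))
            } else if !isInside && wasInside {
                inside.remove(object.id)
                events.append(.exited(object.id))
            }
        }
        return events
    }
}

var tracker = BoundaryTracker()
let ball = Sphere(id: "ball", center: [0, 0, 0], radius: 1)
for cursor: SIMD3<Double> in [[2, 0, 0], [0.5, 0, 0], [0.2, 0, 0], [3, 0, 0]] {
    for event in tracker.update(cursor: cursor, objects: [ball]) {
        print(event)   // a real app would play a short transient haptic here
    }
}
```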

Interaction 4: Collaborative Annotations

A video highlighting the back-and-forth interaction between the digital 3D scene and the device itself.

I created a tool that allows one or more users to use their own devices to navigate a 3D scene and place thoughts locally within it.

Across various demos, I found that when people aren’t as concerned with perfectly placing and orienting a comment, they have an easier time stringing thoughts together and staying focused in this headspace. This felt like an appropriate use of this technique.
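A sketch of the loose-placement idea, assuming each note is dropped a fixed distance in front of the device along its forward direction, so the author never has to position it precisely. The 0.3 m offset, `Annotation`, `placeAnnotation`, and `AnnotationBoard` are hypothetical; syncing the board between devices is out of scope here.

```swift
// Hypothetical annotation: who placed it, what it says, and where it sits.
struct Annotation: Equatable {
    var author: String
    var text: String
    var position: SIMD3<Double>
}

// Drops a note `distance` meters in front of the device (assumed 0.3 m).
// Falls back to -z as "forward" if the supplied direction has zero length.
func placeAnnotation(author: String, text: String,
                     devicePosition: SIMD3<Double>,
                     deviceForward: SIMD3<Double>,
                     distance: Double = 0.3) -> Annotation {
    let length = (deviceForward * deviceForward).sum().squareRoot()
    let direction = length > 0 ? deviceForward / length : SIMD3<Double>(0, 0, -1)
    return Annotation(author: author, text: text,
                      position: devicePosition + direction * distance)
}

// Shared list of notes that several users' devices could append to.
struct AnnotationBoard {
    private(set) var notes: [Annotation] = []

    init() {}

    mutating func add(_ note: Annotation) { notes.append(note) }

    func filtered(by author: String) -> [Annotation] {
        return notes.filter { $0.author == author }
    }
}

var board = AnnotationBoard()
board.add(placeAnnotation(author: "A", text: "Lamp feels too bright",
                          devicePosition: [0, 1, 0], deviceForward: [0, 0, -2]))
board.add(placeAnnotation(author: "B", text: "Move the chair closer",
                          devicePosition: [1, 1, 0], deviceForward: [-1, 0, 0]))
print(board.notes.count)
```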